With the rise of GenAI, most enterprises want to use LLMs to boost productivity by giving their employees access to public LLM providers (such as ChatGPT and Gemini). While this improves employee productivity in routine operations, it also poses several threats to the enterprise: employees may share the organization's intellectual property with these public entities, opening a new avenue for data leakage.

What's LLMInspect

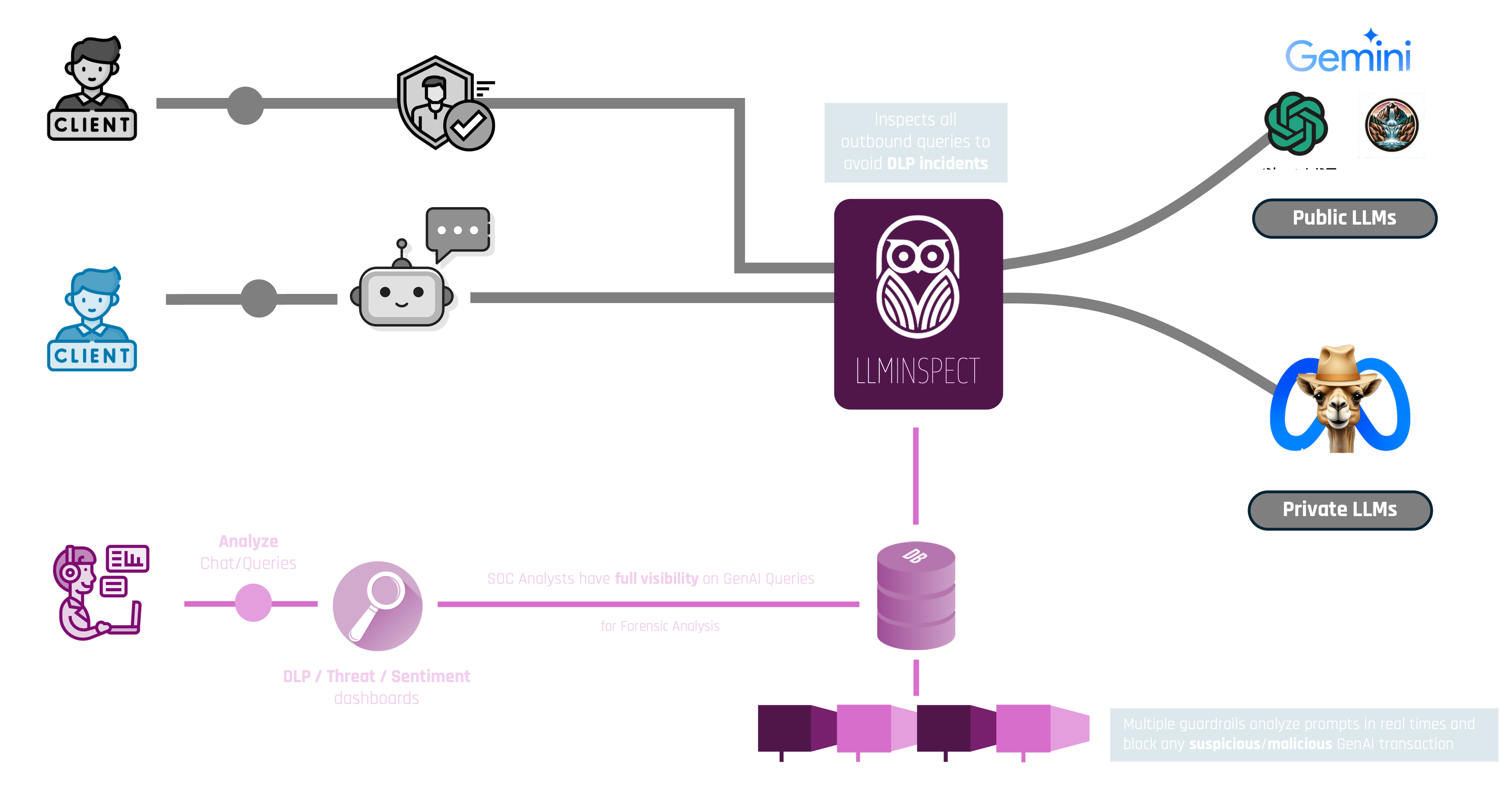

LLMInspect GenAI Gateway is a one-stop solution to these problems: it enhances both the enterprise AI experience and cyber safety. From a single application interface, users can interact with different public and private LLMs and control what is sent to them. Using LLMInspect, SOC analysts can also view employee GenAI usage and discover LLM-related security threats.

LLMInspect also gives its users additional controls, e.g. rate-limiting GenAI queries or re-routing external LLM queries to the enterprise's private LLMs.
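As a rough illustration of the rate-limiting and re-routing ideas, the sketch below uses a per-user token bucket and a simple routing rule. It is not LLMInspect's implementation: the names `TokenBucket` and `choose_backend`, the parameters, and the routing policy (sensitive prompts go to the private LLM) are all assumptions made for this example.

```python
import time


class TokenBucket:
    """Hypothetical per-user limiter: up to `capacity` queries, refilled at `rate` per second."""

    def __init__(self, capacity, rate):
        self.capacity = float(capacity)
        self.rate = float(rate)
        self.tokens = float(capacity)      # start with a full bucket
        self.updated = time.monotonic()    # time of the last refill

    def allow(self, now=None):
        """Return True and consume one token if the query fits the quota."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.updated)
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


def choose_backend(bucket, contains_sensitive_data, now=None):
    """Assumed policy: sensitive prompts stay on the private LLM;
    other prompts go to the public LLM while quota remains."""
    if contains_sensitive_data:
        return "private"
    return "public" if bucket.allow(now) else "rejected"
```

With `TokenBucket(capacity=2, rate=1.0)`, a user can send two public queries back to back, and a third becomes possible once about a second has passed. A real gateway would keep one bucket per user or per team and persist the state outside the process.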

LLMInspect Features

- Simple GUI: ChatGPT-like GUI with Light and Dark modes

- Multimodal Chat: Upload and analyze images and leverage advanced agents with tools and API actions

- Multilingual UI: Support for multiple languages

- Model Selection: OpenAI, Azure, Anthropic, Google, and more

- Multi-User Support: Integrates with LDAP, Active Directory and OpenID

- Flexible Deployment: Proxy, Reverse Proxy, Docker, etc.

- Searching: Search all messages and conversations

- Export: Conversations in various formats (Markdown, JSON, etc.)

Guardrails Library

LLMInspect guardrails are safety mechanisms and guidelines that control, constrain, or guide the behavior of large language models such as ChatGPT or Gemini so that they are used responsibly and ethically. They help prevent harmful outputs, mitigate the risk of misinformation, and keep interactions safe, useful, and aligned with ethical standards.

- DenyList Guardrail

- DenyRegex Guardrail

- Negative Sentiment Guardrail

- PII & BII Guardrail

- Secrets Guardrail

- Prompt Classifier Guardrail

- Unsafe Guardrail

- Business-Specific Guardrail(s)
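To make the first two entries in this list more concrete, here is a minimal sketch of how a deny-list check and a deny-regex check could be chained over a prompt. The function names, the `Verdict` type, and the example terms and pattern are hypothetical and are not taken from LLMInspect itself.

```python
import re
from dataclasses import dataclass


@dataclass
class Verdict:
    allowed: bool
    reason: str = ""


def deny_list_guardrail(prompt, banned_terms):
    """Block the prompt if it contains any banned term (case-insensitive)."""
    lowered = prompt.lower()
    for term in banned_terms:
        if term.lower() in lowered:
            return Verdict(False, f"deny-list term: {term}")
    return Verdict(True)


def deny_regex_guardrail(prompt, patterns):
    """Block the prompt if any regular expression matches it."""
    for pattern in patterns:
        if re.search(pattern, prompt, flags=re.IGNORECASE):
            return Verdict(False, f"deny-regex match: {pattern}")
    return Verdict(True)


def run_guardrails(prompt, checks):
    """Run checks in order and stop at the first one that blocks."""
    for check in checks:
        verdict = check(prompt)
        if not verdict.allowed:
            return verdict
    return Verdict(True)


# Example policy (assumed values): one internal code name, one key-like pattern.
checks = [
    lambda p: deny_list_guardrail(p, ["Project Falcon"]),
    lambda p: deny_regex_guardrail(p, [r"\bAKIA[0-9A-Z]{16}\b"]),
]
```

Calling `run_guardrails("notes on project falcon", checks)` returns a blocking verdict with the matching term as the reason. Guardrails such as sentiment or prompt classification would typically need a model rather than string matching, but could plug into the same chain as additional callables.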

Data Sanitization Strategy

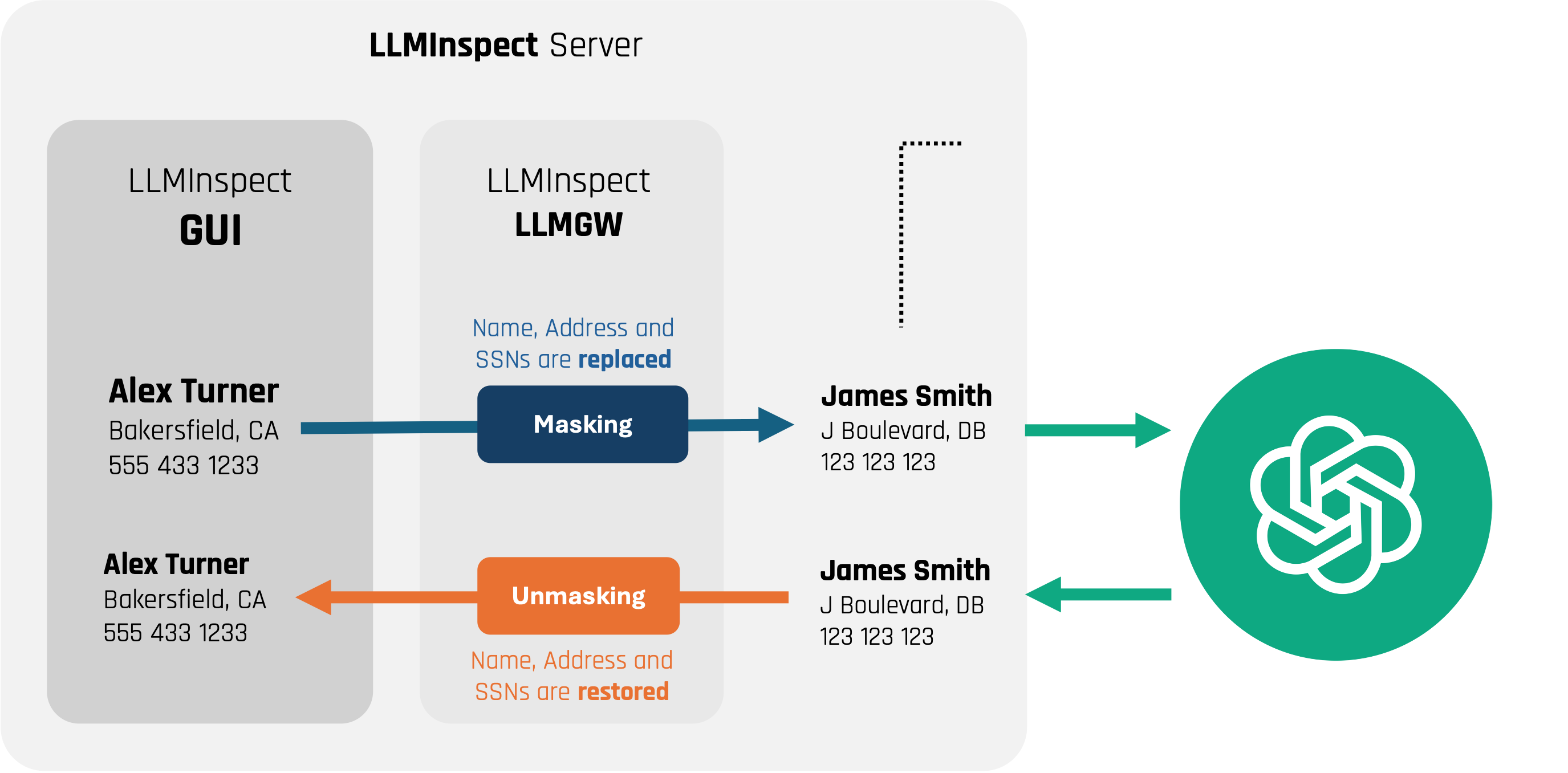

LLMInspect detects Personally Identifiable Information (PII) and Business Identifiable Information (BII) in user prompts and replaces it with fake aliases, ensuring that sensitive data is not exposed to the model, thus safeguarding enterprise data privacy.
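The aliasing step can be sketched as follows. This is a simplified illustration, not LLMInspect's detector: it recognizes only email addresses through a regular expression, and the `sanitize` and `restore` helpers, the alias format, and the idea of mapping aliases back in the model's response are assumptions made for the example.

```python
import re

# Simplified email pattern; a real PII/BII detector covers many more entity types.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def sanitize(prompt):
    """Replace each distinct email with a stable fake alias.

    Returns the sanitized prompt and the original -> alias mapping.
    """
    aliases = {}

    def replace(match):
        original = match.group(0)
        if original not in aliases:
            aliases[original] = f"user{len(aliases) + 1}@example.com"
        return aliases[original]

    return EMAIL_RE.sub(replace, prompt), aliases


def restore(text, aliases):
    """Map aliases in the model's response back to the original values."""
    for original, alias in aliases.items():
        text = text.replace(alias, original)
    return text
```

Because the same original value always maps to the same alias within a prompt, the model still sees consistent references, while the real addresses never leave the gateway. The sketch ignores the case where a prompt already contains a string that looks like one of the aliases.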

All LLMInspect modules are fully instrumented for observability using OpenTelemetry.

The OpenTelemetry collectors and exporters generate extensive logs and traces from LLMInspect to provide real-time visibility into how the LLM is being used. These observability metrics help enterprises improve their GenAI capabilities, enhance privacy, security, and transparency, and subsequently improve business efficiency.